Albums |

Posted by Spice on February 22, 2026

The Oregon Spin: A Brief History of Online Roulette

In the early 2000s, broadband became commonplace in Oregon households. At the same time, the first online roulette sites appeared, offering the casino experience without leaving home. A 2005 court ruling loosened the state’s strict anti-gambling stance, allowing limited online betting. Since then, Oregon’s online roulette has grown from a niche pastime to a sophisticated ecosystem.

Today, regulation and technology coexist. Operators must prove fairness through certified RNGs and annual eCOGRA audits, maintain financial transparency, and enforce age checks. In 2023, a pilot program granted provisional licenses to a handful of operators, testing a “limited-market” model before full regulation. Those platforms already accept local payment methods such as Apple Pay and Venmo, as well as cryptocurrencies such as Bitcoin and Ethereum.

| Year | Event | Impact |
|---|---|---|
| 2018 | First online roulette license | Opened regulated play |
| 2020 | eCOGRA audits introduced | Boosted confidence |
| 2022 | Pilot program launched | Limited-market test |
| 2024 | Crypto payments added | Broader access |
| 2025 | Full licensing expected | Market expansion |

These milestones show Oregon’s gradual, cautious approach to online roulette.

Desktop vs Mobile Experience

Imagine a Portland evening: your laptop displays a high-resolution roulette table, and you can keep a strategy guide open side by side. Desktop offers a wide view and more room for multitasking. Now picture a Seattle coffee shop instead: you tap a button on your phone, and the table collapses into a touch-friendly layout. Buttons enlarge, a “quick spin” feature saves time, and you can play anywhere.

| Feature | Desktop | Mobile |
|---|---|---|
| Graphics | Ultra HD | HD |
| Latency | < 100 ms | < 150 ms |
| Bet Placement | Mouse/Keyboard | Touch |
| Multitasking | High | Moderate |
| Portability | Low | High |

Mobile usage rose 35% over the past two years. Many still choose desktop for longer sessions that involve complex betting systems.

Live Dealer Roulette

Live dealer games bring a human touch to the digital world. Licensed Oregon operators invest in studio quality: 1080p cameras, soundproof booths, and redundant internet. Players see the dealer’s hand, hear commentary, and feel the suspense of a real ball roll. In 2023, live dealer roulette attracted a 22% increase in daily active users.

Key elements:

- Camera – 1080p or better, minimal buffering.

- Audio – Clear voice, no background noise.

- Interface – Responsive to touch or mouse.

- Fairness – RNG backup for audit.

- Angles – Optional close-ups.

The visible betting process reduces skepticism compared to purely software-based games.

Bankroll Management

A structured bankroll plan protects against emotional swings. Oregon players use several systems:

| Strategy | Risk | Ideal Bankroll | Typical Return |
|---|---|---|---|
| Flat | Low | $500+ | Stable |
| Martingale | Medium | $1,000+ | High potential |
| Fibonacci | Medium | $800+ | Balanced |
| D’Alembert | Low | $600+ | Consistent |

Many combine Martingale with a stop-loss limit to avoid large losses. Separating a “fun fund” from disciplined betting money can improve long-term results.
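
The Martingale-with-stop-loss combination can be sketched as a small simulation. This is only an illustration under stated assumptions: even-money bets on a single-zero wheel (win probability 18/37), and a hypothetical function `martingale_session` whose name and parameters are invented here, not taken from any operator.

```python
import random

def martingale_session(bankroll, base_bet, stop_loss, max_spins,
                       win_prob=18 / 37, seed=None):
    """Simulate even-money bets that double after each loss.

    The session ends when max_spins is reached, or when the next bet would
    exceed either the remaining bankroll or the remaining stop-loss budget.
    Returns the final bankroll.
    """
    rng = random.Random(seed)
    start = bankroll
    bet = base_bet
    for _ in range(max_spins):
        loss_budget = stop_loss - (start - bankroll)
        if bet > loss_budget or bet > bankroll:
            break  # the stop-loss (or an empty bankroll) ends the session
        if rng.random() < win_prob:
            bankroll += bet
            bet = base_bet  # reset to the base stake after a win
        else:
            bankroll -= bet
            bet *= 2        # double the stake after a loss
    return bankroll

# Hypothetical run: $1,000 bankroll, $10 base bet, $300 stop-loss, 200 spins.
finals = [martingale_session(1000, 10, 300, 200, seed=s) for s in range(1000)]
print("worst session:", min(finals))
print("average final bankroll:", sum(finals) / len(finals))
```

Because every bet is checked against the remaining loss budget, no simulated session can end more than the stop-loss amount below its starting bankroll. The stop-loss caps the damage of a losing streak; it does not change the house edge on each spin.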

Bonuses & Promotions

Competition fuels creative offers. Welcome bonuses often range from 100% to 200% of the first deposit, with wagering requirements. Loyalty programs award points per dollar wagered, redeemable for cashbacks, free spins, or VIP perks.

| Operator | Welcome Bonus | Wagering Req. | Loyalty Tier | Redemption |
|---|---|---|---|---|
| OregonCasinoX | 150% up to $500 | 30x | Gold | Cashback, Free Spins |
| LuckyRoulette | 200% up to $300 | 25x | Silver | Free Spins, VIP Access |
| CascadeBet | 100% up to $400 | 35x | Platinum | Cashback, Tournaments |

Promotions help new players test strategies before risking their own money.
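
To see what the wagering requirements in the table mean in practice, here is a rough sketch that works out the bonus and the total amount that must be wagered for a given deposit. It assumes the multiplier applies to the bonus amount only (some operators apply it to deposit plus bonus, so check the terms); the `OFFERS` dictionary and the function names are hypothetical.

```python
# Offer terms copied from the table above; the structure is illustrative only.
OFFERS = {
    "OregonCasinoX": {"match_pct": 150, "cap": 500, "wagering": 30},
    "LuckyRoulette": {"match_pct": 200, "cap": 300, "wagering": 25},
    "CascadeBet": {"match_pct": 100, "cap": 400, "wagering": 35},
}

def bonus_for(deposit, offer):
    """Matched bonus for a deposit, limited by the offer's cap."""
    return min(deposit * offer["match_pct"] / 100, offer["cap"])

def required_turnover(deposit, offer):
    """Total stakes needed to clear the bonus (assumes bonus-only wagering)."""
    return bonus_for(deposit, offer) * offer["wagering"]

for name, offer in OFFERS.items():
    print(name, bonus_for(200, offer), required_turnover(200, offer))
```

For a $200 deposit under these assumptions, the largest headline percentage does not mean the lowest turnover: the cap and the multiplier matter as much as the match rate.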

Responsible Gaming

The Oregon Lottery Commission requires deposit limits, time-out features, and reality checks. Operators also partner with charities and addiction services. In 2024, a public-service campaign highlighted problem-gambling signs. These safeguards aim to reduce harm while keeping the wheel spinning safely.

Emerging Trends

Virtual Reality

Affordable headsets let players step into a 3D casino. VR roulette lets you walk around the table, examine the wheel, and chat with other avatars. Early feedback praises immersion, though the learning curve can be steep.

| Feature | Description | Feedback |
|---|---|---|
| 3D Table | Full 360° view | “Feels real.” |
| Hand Tracking | Motion controls | “Natural.” |
| Social | Chat with others | “Adds a layer.” |

Blockchain

Smart contracts automate payouts and record bets on a public ledger, ensuring transparency. A blockchain-based system in Oregon offers instant withdrawals and tokenized loyalty points that can be used across platforms.

Expert Views

Dr. Maya Patel, iGaming researcher, says Oregon’s regulated market is ready for rapid growth. The state’s balanced framework and tech adoption could push the market past $120 million by 2027. Jonas Lee notes that local payment options – Apple Pay, Venmo, Bitcoin – give Oregon operators an edge over international sites.

Choosing the Right Platform

Select a platform that matches your preferences: live dealer realism, mobile convenience, or VR excitement. Check licensing, security, and bonus terms before you bet. Oregon’s regulated market provides many options, so explore, compare, and find what suits you best.

What do you think? Are you leaning toward live dealer or mobile play? Let us know in the comments below!

Albums | How a Wallet Can Fight MEV: Practical Simulation, Routing, and Real-World Trade Protection

Posted by Spice on February 5, 2026

Whoa! I was staring at a pending transaction on mainnet. My heart skipped a beat for a second. Somethin’ felt off about the gas spike. Initially I thought it was a fluke, but then I noticed a pattern across the mempool that suggested sophisticated extraction tactics were at play.

Seriously? MEV—maximal extractable value—has been whispered about in every DeFi chat. People talk like it’s an invisible tax on users. On one hand it feels inevitable. On the other hand, examining specific block-level traces and sandwich attempts reveals that much of this “inevitability” is driven by tooling choices and poor transaction design rather than some immutable law.

Hmm… My instinct said the wallet needed to do more than just prompt for gas. Wallet UX often hides the risk. That bugs me. Actually, wait—let me rephrase that: the wallet needs to simulate transaction effects, reveal slippage paths, and show possible MEV opportunities in a way a human can act on before they hit send, otherwise users are flying blind.

Here’s the thing. Simulation is not a luxury. It’s a security practice. Developers and sophisticated users use it routinely. When a wallet can replay a transaction against a tipped mempool and show whether frontruns or reorgs could flip a trade, that wallet has moved from passive signer to active protector.

Wow! Transaction simulation can reveal hidden slippage and gas inefficiencies. It can also surface whether your trade creates a sandwich target, which is very very nasty. Not all simulations are equal though. High-fidelity simulation requires access to mempool state, realistic miner behavior models, and an ability to re-evaluate state after pending transactions are inserted or dropped, which is both computationally and infrastructurally expensive.
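
To make the sandwich idea concrete, here is a toy sketch. It is not a mempool simulator and not Uniswap v3: it assumes a simple constant-product pool (x·y = k) with a 0.3% fee, made-up reserves, and hypothetical function names. It only shows how a front-run and back-run around a swap change what the victim receives.

```python
def swap(reserves, token_in, token_out, amount_in, fee=0.003):
    """Return (amount_out, new_reserves) for a constant-product swap.

    The fee is taken from the input; the full input stays in the pool.
    """
    effective_in = amount_in * (1 - fee)
    amount_out = reserves[token_out] * effective_in / (reserves[token_in] + effective_in)
    new_reserves = dict(reserves)
    new_reserves[token_in] += amount_in
    new_reserves[token_out] -= amount_out
    return amount_out, new_reserves

def simulate_sandwich(reserves, victim_in, attacker_in):
    """Compare the victim's ETH->USDC swap alone versus sandwiched."""
    # Baseline: the victim trades against the untouched pool.
    clean_out, _ = swap(reserves, "ETH", "USDC", victim_in)
    # Front-run: the attacker buys first, moving the price against the victim.
    attacker_usdc, after_front = swap(reserves, "ETH", "USDC", attacker_in)
    victim_out, after_victim = swap(after_front, "ETH", "USDC", victim_in)
    # Back-run: the attacker sells what they bought into the moved price.
    attacker_eth_back, _ = swap(after_victim, "USDC", "ETH", attacker_usdc)
    return {
        "clean_out": clean_out,
        "sandwiched_out": victim_out,
        "victim_loss_pct": 100 * (clean_out - victim_out) / clean_out,
        "attacker_profit_eth": attacker_eth_back - attacker_in,
    }

# Hypothetical pool and trade sizes, chosen only for illustration.
pool = {"ETH": 1_000.0, "USDC": 2_000_000.0}
print(simulate_sandwich(pool, victim_in=50.0, attacker_in=100.0))
```

A real wallet simulation would replay the transaction against actual chain and mempool state, which this sketch does not attempt. The point is only that the victim's loss and the attacker's profit both fall out of replaying the same swap in a different order.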

My instinct said do this. Trusted relayers and private transaction queues alter the dynamics a lot. They can sidestep public mempools and reduce attack surfaces. Yet they introduce centralization trade-offs. Balancing privacy and decentralization is a design tension—opt for a private relay and you mitigate many sandwich risks, though you now rely on another actor to behave honestly under load…

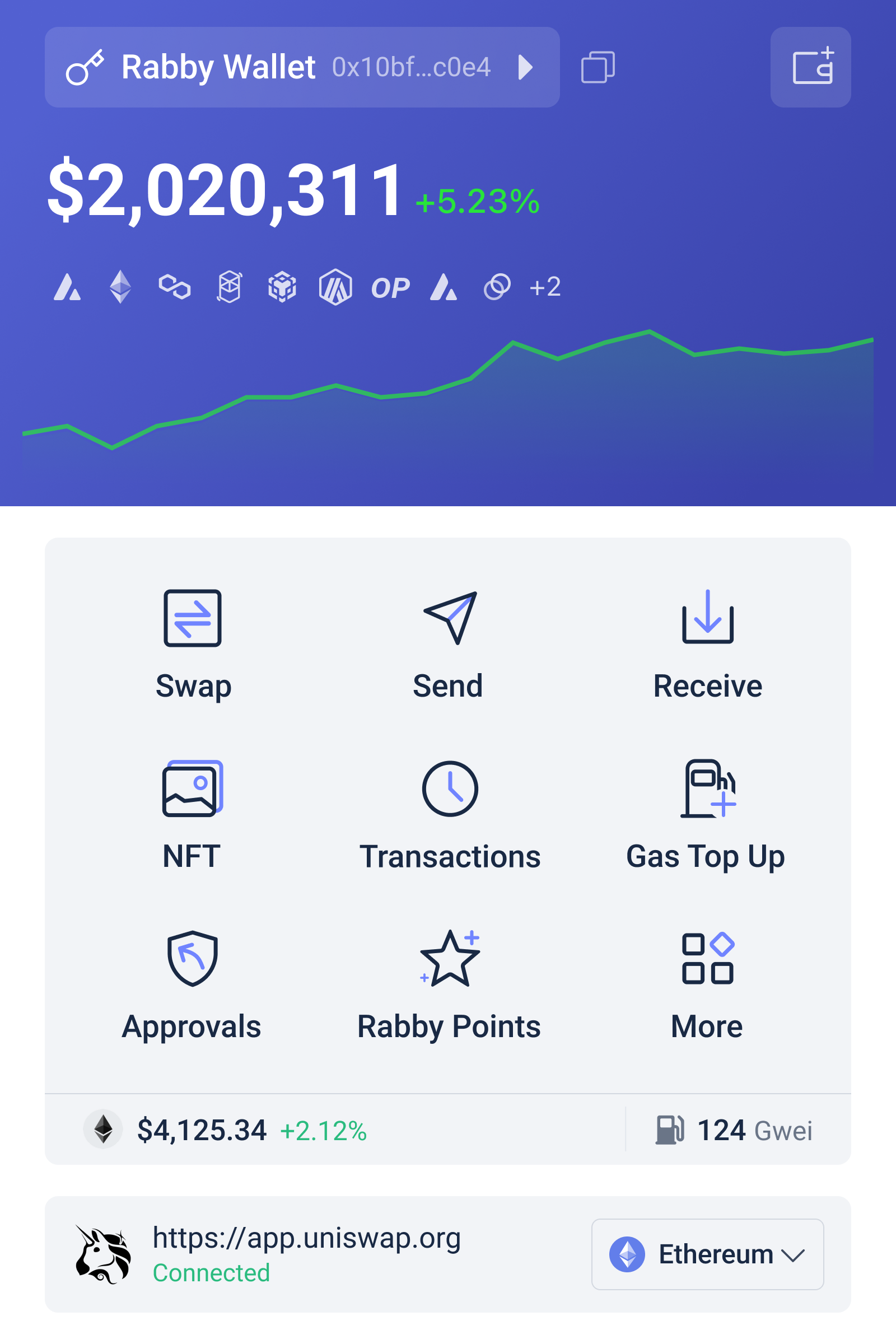

I’ll be honest… Rabby-style wallets that focus on transaction simulation and easy-to-read risk signals help everyday users. They translate complex blockchain mechanics into actionable prompts. That translation matters. For example, flagging that a swap will likely be sandwiched within the next few blocks gives a user a real decision point: resubmit with slippage, route via a DEX aggregator, or split the trade.
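
One way to reason about the "resubmit with slippage" option is to ask how much room a given tolerance leaves a front-runner. The sketch below assumes the same kind of toy constant-product pool with a 0.3% fee; the function names and numbers are hypothetical, and a real router or wallet would compute this against live pool state.

```python
def amount_out(reserve_in, reserve_out, amount_in, fee=0.003):
    """Constant-product quote with the fee taken from the input."""
    effective_in = amount_in * (1 - fee)
    return reserve_out * effective_in / (reserve_in + effective_in)

def min_amount_out(quoted_out, slippage_bps):
    """Smallest output the user accepts; slippage_bps=50 means 0.5%."""
    return quoted_out * (1 - slippage_bps / 10_000)

def max_frontrun(reserve_in, reserve_out, victim_in, slippage_bps, fee=0.003):
    """Largest front-run (in the input token) that still lets the victim's
    swap clear its minimum-output check, found by bisection.

    The search is capped at a front-run as large as the pool's input reserve.
    """
    quoted = amount_out(reserve_in, reserve_out, victim_in, fee)
    floor = min_amount_out(quoted, slippage_bps)
    lo, hi = 0.0, float(reserve_in)
    for _ in range(60):
        mid = (lo + hi) / 2
        taken = amount_out(reserve_in, reserve_out, mid, fee)
        victim_out = amount_out(reserve_in + mid, reserve_out - taken, victim_in, fee)
        if victim_out >= floor:
            lo = mid   # the victim's swap would still succeed
        else:
            hi = mid   # the victim's swap would revert
    return lo

# Hypothetical pool: 1,000 ETH / 2,000,000 USDC, victim sells 50 ETH.
for bps in (10, 50, 300):
    print(bps, "bps ->", round(max_frontrun(1_000, 2_000_000, 50, bps), 3), "ETH")
```

A tighter tolerance shrinks the front-run that can fit in ahead of the trade, at the cost of more reverts when the price moves for ordinary reasons.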

Okay, so check this out— I ran a test where I simulated a large swap across Uniswap v3 pools. The simulator showed a potential MEV extraction of several percent. That would have eaten a big chunk of the expected return. Initially I thought adjusting gas would be sufficient, but the simulation showed that rerouting through a less liquid pool and using a different tick range reduced exposure more effectively than simply raising gas (oh, and by the way, that was a back-of-the-napkin insight that turned out true on deeper analysis).

I’m not 100% sure, but sometimes a simple nonce or timing tweak can avoid being targeted. Other times the ecosystem’s automatic bots adapt quickly. So no one-size-fits-all fix exists. On the protocol level, adjustments like batch auctions, fee markets, or proposer-builder separation can materially change MEV economics, but those solutions require coordination among validators, builders, and users which is slow and complex.

Seriously? DeFi protocols have tools too. Flashbots has advanced research and tooling aimed at building private relays. Yet integration is uneven across wallets and dApps. That’s why embedding simulation and MEV-aware routing into the wallet, coupled with optional private-send features and clear user prompts, creates a powerful front-line defense that operates independently of slow-moving protocol governance.

Want to see it in practice?

If you want to see an example of a wallet that prioritizes transaction simulation and clear risk signals, check out https://rabby-wallet.at/ — it shows how these ideas look in a product focused on protecting users while keeping UX sane.

Wow! I came away with a clearer view of practical defense strategies. On one hand invasive front-running bots are a pain. On the other hand the right wallet tooling nudges users away from disaster. I still have open questions about UX friction—how aggressive should automated mitigation be before it annoys power users, and how transparent must it remain so regulators and auditors can verify behavior?

Hmm… If you care about protecting trades, test your wallet’s simulation features. Try routing options, toggles for private relays, and look for explicit MEV warnings. A little effort up front can mean big savings later. If you do some back-of-the-envelope testing you’ll see the difference in slippage and realized returns, and yeah, you might save yourself some very very avoidable headaches.

FAQ

How does simulation differ from a dry run?

Simulation models chain state and pending mempool interactions. A dry run is often limited to node mempool validation and may not model adversarial actors inserting transactions faster than miners publish blocks.

Can wallets eliminate MEV entirely?

No. They can reduce risk and improve decisions, though. Combining wallet-level simulation, optional private relay submission paths, and protocol-level reforms is the pragmatic path forward; there is no single silver bullet.

Albums | Free Demo-Mode Games on Instant Casino

Posted by Spice on January 30, 2026

The online gaming sector is growing rapidly, offering players an impressive variety of entertainment options. Among these, demo-mode games hold a prominent place, letting users try games without risking real money. This approach has many advantages, including the chance to learn the rules, discover strategies, and enjoy the game without pressure.

For those who want to explore the world of online casinos safely, the free version of Instant Casino is a good platform for playing many games in demo mode. Whether you prefer slots, blackjack, or roulette, the platform offers a realistic, user-friendly experience that makes it easy to get familiar with the gameplay.

Demo-mode games are also an excellent way to try different titles and compare their features before playing with real money. They are a key step in building skills, improving your chances of success, and enjoying online casino games without any financial risk.

Discovering Demo-Mode Games on Instant Casino

Demo mode on Instant Casino lets players discover a wide range of casino games without risking real money. It is an excellent opportunity for beginners who want to learn the rules and strategies of different games. Experienced players can also test new slots or table games before playing for real.

With this feature, you can explore the casino’s interface, get familiar with its features, and have fun without financial pressure. Demo games are generally accessible directly from the website or the mobile app, giving all users a smooth, user-friendly experience.

Advantages of Demo Mode on Instant Casino

- Learning the rules of the games

- Testing strategies without risk

- Discovering new games

- Practicing before playing with real money

Every player should take advantage of this option to improve both their chances of success and their enjoyment. Demo mode also helps you better understand how the casino’s bonuses and jackpots work.

How to Access Game Variants in Simulation Mode Without Registering

To make the most of the game variants on Instant Casino in demo mode, you do not need to create an account or register. This lets players explore and practice without commitment, which is ideal for learning the rules and testing different strategies. Simulation mode is accessible directly from the site, giving instant access to a wide range of games.

Here is how to access game variants in demo mode without registering:

Steps for Playing in Demo Mode Without Registering

- Choose a reliable online casino site: Select a recognized platform that offers free demo-mode games.

- Go to the “Free games” or “Demo mode” section: Most casinos highlight this option on their home page or in the games section.

- Select the game you want: Browse the list of available games, such as slots, roulette, or poker.

- Launch demo mode: Simply click the chosen game to start the demo version, which generally uses virtual currency and requires no registration.

- Explore and practice: Use this opportunity to discover all the variants and improve your command of the game.


The Advantages of Testing Virtual Roulette Without Real Stakes

Playing virtual roulette in demo mode gives players a chance to explore the game without financial risk. It helps them understand the rules, the betting options, and how the game works overall, without the pressure of losing real money.

This kind of testing also helps beginners build confidence, letting them gain experience and develop strategies before playing with real money. It is a key step toward mastering roulette safely.

The Main Advantages of Demo Roulette

- Risk-free learning: Playing without real stakes lets you learn the rules and the different game options safely.

- Strategy testing: Players can experiment with various strategies and see which work best without losing money.

- Building confidence: Regular practice prepares players for real-money play with a better understanding of the game.

- Discovering features: The demo version lets you explore all the options and features available in virtual roulette.

Strategies for Mastering Slots in Demo Mode Before Playing for Real Money

Before starting a real-money game on Instant Casino, it is essential to practice with the demo version of the slots. This helps you understand how each game works, learn to manage your virtual budget, and identify the particularities of the different types of machines. Mastering these aspects improves your chances of success when you move on to real-money bets.

The first step is to get familiar with the rules and specific features of each machine. In demo mode, you can test different strategies without risking real money, which is a valuable learning opportunity. It is also worth observing the bonuses and special features, such as free spins or multipliers, to optimize your future play.

Main Strategies for Mastering Slots in Demo Mode

- Study the return-to-player (RTP) rates: Choose machines with a high RTP to maximize your long-term chances.

- Manage your virtual budget: Set a betting limit to avoid quickly losing all your virtual credits.

- Test different betting strategies: Alternate between low and high bets to observe the effect on how often you win.

- Take notes: Record which machines offer regular bonuses or better performance.

Finally, follow a systematic process when trying demo machines, and do not stay too long on a single one. Experimenting with several games helps you understand their particularities and develop a suitable approach before playing with real money.

Using Interactive Features to Improve Your Performance in Demo Mode

When playing demo-mode games on Instant Casino, it pays to make full use of the interactive features on offer to improve your results and develop your skills. These tools help players understand how the games work, experiment with different strategies, and adapt their approach to the situations they encounter.

By using features such as practice sessions, built-in tutorials, and real-time statistics, users can refine their technique and gain confidence for real games. These features also support learning bankroll management and recognizing game patterns, both of which are key to performing well in online casinos.

Improving Your Performance with Interactive Features

- Using built-in tutorials: These guides explain the rules, strategies, and tips for different games, helping beginners quickly acquire basic skills.

- Analyzing statistics: Reviewing data from your sessions helps you identify strengths and weaknesses, adjust your strategies, and avoid repeating mistakes.

- Simulations and practice sessions: These let you practice without risk, experiment with new techniques, and strengthen your command of the game mechanics.

- Live interactions: Some platforms offer interactive features such as chat or virtual assistance, which make problem solving and personalized learning easier.

| Feature | Benefits |
|---|---|
| Practice sessions | Free practice, gradual improvement |
| Real-time statistics | Detailed analysis, informed decisions |
| Built-in tutorials | Faster training, deeper understanding |
| Interactive support | Immediate assistance, personalized advice |

Comparing Free Game Options to Optimize Your Entertainment Experience

When it comes to enjoying demo-mode games on Instant Casino, choosing the right option matters for getting the most fun and value out of your experience. The variety of games available lets every player find one that matches their preferences and entertainment goals.

To make an informed choice, it helps to look at the key characteristics of each type of game, its specific advantages, and its level of difficulty. That way you can match your selection to your playing style and improve your comfort and overall satisfaction.

Comparison of Free Game Options

| Game type | Advantages | Disadvantages | Recommended for |
|---|---|---|---|
| Slots | Easy access, wide variety, quick wins | Potential for addiction, little strategy | Beginners and players seeking instant entertainment |
| Table games (roulette, blackjack, poker) | Strategy and thinking, realistic simulation | Learning curve, more complex | Experienced players or those who want to test strategies |
| Video poker | Combines slot-style play with strategy | More complex to operate | Intermediate players seeking a balance between luck and skill |
| Scratch cards | Simple, instant, easy fun | No room for strategy, relies on luck | Players looking for a simple, quick experience |

In summary, to get the most out of your entertainment, vary the types of games according to your mood, your level of experience, and your goals. Slots offer an immediate thrill, while strategy games such as blackjack let you sharpen your skills. By combining these options, you can enrich your gaming experience while reducing the risks of addiction or boredom. Adapt your selection to get the best out of the free games on Instant Casino and enjoy entertainment that is both stimulating and safe.

Albums | Top Tips for Smart Online Casino Play in 2026

Posted by Spice on January 13, 2026

Online casino players demand safety, variety and value. This guide breaks down the essentials for choosing the best casino site, optimizing your bankroll and enjoying top-rated slots and live dealer games. Whether you are a newcomer or a seasoned player, smart choices reduce risk and increase entertainment.

Start your research with a trusted review source and verify licensing and player protections directly on the site. For a quick reference point to a reputable operator, visit https://rippercasino1.net/ while you compare available bonuses, game libraries and payment options before registering.

Why Licensing and Security Matter

Regulation ensures fair play and clear dispute resolution. Look for licenses from recognized jurisdictions, SSL encryption, and independent audits. A licensed casino publishes test certificates and RTP summaries — proof that random number generators and game outcomes are independently verified.

Key Security Features to Check

- Valid gaming license visible on the footer

- 256-bit SSL encryption for transactions

- Two-factor authentication for accounts

- Transparent withdrawal and KYC policies

Choosing Games: RTP, Volatility and Variety

Selecting the right games depends on goals: steady returns or big jackpot swings. RTP (return to player) and volatility guide expectations. High RTP and low volatility favor longer sessions; high volatility and progressive jackpots offer rare big wins.

Game Categories at a Glance

| Game Type | Typical RTP | Recommended For |
|---|---|---|
| Online Slots | 92%–98% | Casual play, bonus features |
| Table Games | 95%–99% | Strategic play, lower house edge |
| Live Dealer | 95%–99% | Real-time interaction, higher immersion |
| Progressive Jackpots | Varies widely | Chance of very large wins |

Bankroll Management and Betting Strategy

Budgeting is the single most effective way to extend play and reduce losses. Set session limits, use only disposable entertainment funds and avoid chasing losses. Adjust bet size to the game’s volatility and your bankroll: smaller bets for high volatility, larger bets for consistent low-volatility games.

Practical Betting Rules

- Never stake more than 1–2% of your bankroll per spin or hand

- Use loss limits and cool-off periods built into the casino account

- Claim bonuses only when wagering terms suit your style

- Track wins and losses to refine strategy over time
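
The rules above can be turned into a small tracker. This is a minimal sketch, not a feature of any casino account: the `Session` class, its default 2% stake cap, and the $50 loss limit are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Session:
    bankroll: float
    risk_pct: float = 2.0     # cap each stake at this % of the current bankroll
    loss_limit: float = 50.0  # stop once session losses reach this amount
    start: float = field(init=False, default=0.0)
    history: list = field(default_factory=list)

    def __post_init__(self):
        self.start = self.bankroll  # remember where the session began

    def max_stake(self):
        """Largest stake allowed by the percent-of-bankroll rule."""
        return self.bankroll * self.risk_pct / 100

    def can_play(self):
        """True while session losses are still below the loss limit."""
        return self.start - self.bankroll < self.loss_limit

    def record(self, stake, payout):
        """Log one spin or hand; payout is the amount returned (0 on a loss)."""
        if not self.can_play():
            raise RuntimeError("loss limit reached; stop for this session")
        if stake > self.max_stake():
            raise ValueError("stake exceeds the per-bet limit")
        self.bankroll += payout - stake
        self.history.append((stake, payout))

session = Session(bankroll=500.0)
print(session.max_stake())   # stake cap at the start of the session
session.record(10.0, 0.0)    # a losing $10 bet
print(session.bankroll, session.max_stake())
```

Because the cap is a percentage of the current bankroll, the allowed stake shrinks after losses, which is the opposite of chasing them.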

Bonuses, Wagering and Value Calculation

Bonuses boost playtime but carry wagering requirements. Compare welcome offers by effective value: bonus amount, free spins, wagering multiplier and maximum bet rules. A 100% match with x20 wagering on a low-cap bonus may be less valuable than a smaller bonus with fairer terms.

Quick Bonus Checklist

- Read wagering requirements and excluded games

- Note contribution rates for slots vs table games

- Check expiry periods and max withdrawal caps
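
A rough way to compare offers is to subtract the expected loss from clearing the wagering requirement from the bonus itself. The sketch below assumes the multiplier applies to the bonus only, that every bet counts 100% toward wagering, and that the game's RTP holds on average; it ignores caps, expiry, and maximum-bet rules. The function name and example figures are hypothetical.

```python
def bonus_expected_value(bonus, wagering_multiplier, rtp):
    """Bonus minus the expected loss from wagering it the required times.

    rtp is a fraction, e.g. 0.96 for a 96% return-to-player game.
    """
    turnover = bonus * wagering_multiplier
    expected_loss = turnover * (1 - rtp)
    return bonus - expected_loss

# Two made-up offers played on a 96% RTP game.
large_offer = bonus_expected_value(bonus=100, wagering_multiplier=20, rtp=0.96)
small_offer = bonus_expected_value(bonus=50, wagering_multiplier=10, rtp=0.96)
print(round(large_offer, 2), round(small_offer, 2))
```

Under these assumptions the $100 bonus at x20 is worth about $20 in expectation, while the $50 bonus at x10 is worth about $30, which is the sense in which a smaller bonus with fairer terms can be the better deal.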

Payment Options and Speed

Fast, low-fee payment methods improve convenience. Prioritize casinos that support reputable e-wallets, cards and bank transfers, and check processing times for deposits and withdrawals. Crypto options may offer speed and privacy but verify legal acceptance in your jurisdiction.

Responsible Play and Support

Responsible gaming tools protect players. Set deposit, session and loss limits and use reality checks. If gambling causes distress, contact self-exclusion services or support organizations. A reputable casino provides easy access to responsible play tools and third-party help links.

By combining verified security, smart bankroll rules, careful bonus selection and a focus on higher-RTP games, you build an enjoyable and sustainable approach to online casinos. Use the checklists and table above to compare sites and craft a personalized plan that suits your goals and entertainment budget.

Albums | Regulated Prediction Markets, Political Bets, and the Practical Side of Logging into Kalshi

Posted by Spice on January 4, 2026

Whoa! I was halfway through an email thread the other day when a friend asked me: “Is it okay to bet on election outcomes on those regulated sites?” Short answer: yes, but with layers. My instinct said there was an easy yes/no, but actually, wait—it’s messier than that. Initially I thought the conversation would be a quick primer on market odds and voter models. Then I remembered how often regulation, product design, and user experience collide in surprising ways.

Here’s the thing. Regulated trading venues are fundamentally different from the wild west of unregulated prediction forums. They carry legal guardrails, liquidity rules, and surveillance that aim to reduce fraud and market manipulation. That matters a lot when politics are the underlying event, because stakes aren’t only monetary; they’re reputational, legal, and sometimes systemic. On the other hand, these platforms also introduce friction—verification steps, KYC, and limits—that change how retail traders engage.

Something felt off about easy comparisons. Comparing a regulated exchange to a social betting app is like comparing a brokerage to a group chat. They might both show prices, but the incentives and constraints are different. For people who want to use prediction markets to learn or hedge, those differences matter. For people who are curious about political predictions specifically, regulated venues offer a space that is designed to be auditable and that can, in theory, withstand legal scrutiny. I’m biased toward transparency, though—so that part appeals to me.

Practically speaking, if you’re thinking about political event contracts you should consider three things: market design, counterparty risk, and operational security. Market design affects how information is aggregated. Counterparty risk tells you who bears the other side of your trade. Operational security governs whether your account stays yours. On one hand you want open and liquid markets. On the other hand, too much openness without guardrails invites manipulation. Hmm… there are trade-offs.

Why regulation matters — short primer and a login note

Regulation, mainly by the CFTC in the U.S., means exchanges must monitor trading for fraud and maintain certain protections. That creates costs and operational practices that show up as login hurdles and identity checks. For newcomers, those checks can feel annoying. Really? Yes. But they also help keep the market solvent and trusted.

If you’re trying to get into a regulated platform like Kalshi, start with the official site for onboarding requirements. You can find direct guidance and support at https://sites.google.com/walletcryptoextension.com/kalshi-official/ —that page consolidates basic links and pointers for first-time users, including verification expectations and where to ask questions. I’ll be honest: documentation moves slowly sometimes, and customer support responses can be variable. Still, the official guidance is the right place to begin.

Logins on regulated exchanges tend to be stricter. Two-factor authentication is common. Identity verification is common. You will often be asked to provide photo ID and proof of address. That feels invasive to some, somethin’ like a necessary evil if you want to trade there. But the benefit is that if markets involve politically sensitive events, those identity measures create an audit trail that regulators can examine if problems arise. On the flip side, this same trail means you shouldn’t reuse weak passwords or ignore security best practices.

From a trader’s point of view the most immediate frictions are: waiting for KYC approval, having orders routed to specific clearing arrangements, and limits on contract sizes during thin liquidity. Those factors can make political contract pricing jumpy and sometimes less predictive than you’d hope the moment news breaks. Initially I thought that regulated prices would be smoothly efficient. Though actually, in practice, efficiency is limited by participation and operational cadence.

Here’s what I do and tell people I mentor. First, separate your curiosity from capital. Use a small test allocation to learn market microstructure and fee schedules. Second, document your positions. Really track them, because event resolution details can be nuanced. Third, protect your login: use a password manager, enable MFA, and treat support emails with skepticism. Phishing attempts target both regulated and unregulated traders, and they get creative. Seriously?

There’s also an emotional dimension. Betting on political outcomes feels different than trading equities or weather contracts. It triggers bias and confirmation-seeking behaviors in even experienced traders. My gut reaction when a volatility spike hits a political market is often wrong. On one hand I want to trade that spike because it feels informative. On the other hand it may be noise amplified by a single news item that won’t change fundamentals. So I step back, reassess my priors, and only then act.

Market design notes: how political contracts differ

Political event contracts often use binary outcomes—yes or no—to a specific question. The precision of the contract language matters. If a resolution is ambiguous, disputes can drag on and create settlement uncertainty. That is very very important. Clear wording and predefined settlement criteria reduce the risk of contested outcomes and regulatory headaches.
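
For a binary contract, the arithmetic is short enough to sketch. This assumes a yes contract that pays 100 cents if the event resolves yes and nothing otherwise, with prices quoted in cents; the function names and the optional `fee_cents` parameter are hypothetical, so check the exchange's actual fee schedule.

```python
def implied_probability(price_cents):
    """Read a yes-contract price (between 0 and 100 cents) as a probability."""
    if not 0 < price_cents < 100:
        raise ValueError("price must be strictly between 0 and 100 cents")
    return price_cents / 100

def expected_profit_cents(price_cents, my_probability, fee_cents=0.0):
    """Expected profit per yes contract bought at price_cents, given your own
    probability estimate for the event resolving yes."""
    return my_probability * 100 - price_cents - fee_cents

# A contract trading at 62 cents, evaluated under two different beliefs.
print(implied_probability(62))
print(round(expected_profit_cents(62, 0.70), 2))
print(round(expected_profit_cents(62, 0.55), 2))
```

The trade only has positive expected value when your own estimate is above the price-implied probability by more than the fees, and that estimate is only meaningful if the contract wording resolves the way you expect.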

Liquidity is another issue. Political events can attract sudden interest that dries up as attention shifts. Market makers sometimes provide depth, but regulated venues keep controls that can limit leverage or suspend trading in extraordinary situations. Those controls can be frustrating to active traders, but they exist to preserve market integrity and avoid cascading failures.

On a practical note, keep an eye on the terms page and the event FAQ before you trade. If the contract resolution refers to a specific news outlet, a government source, or a calendar date, make sure you understand exactly what evidence the exchange will accept. It sounds nitpicky, but it’s the detail that decides whether a trade pays off.

Common questions traders ask

Can I treat political contracts like polls?

Short answer: not exactly. Polls are a data input, but market prices reflect the beliefs of people willing to put money on the line. Markets incorporate polls, but also incorporate tradeable information, hedging flows, and risk premia. Use polls as context, not as the sole decision engine.

Are regulated platforms safer to use?

Safer in terms of legal oversight and audit trails, yes. But every platform still requires you to manage personal security. Regulation reduces certain risks but does not eliminate phishing, account takeovers, or operational outages.

What if an event’s wording is unclear at settlement?

Exchanges usually have dispute processes and arbitration. That means settlement can be delayed while the exchange adjudicates evidence. Expect slower resolution timelines in contested political contracts.